VSM Cover Story

Targeting Azure Storage

Learn how to store and retrieve binary large objects in the cloud with Azure's RESTful Storage API.

The collective storm swirling around the nascent cloud computing market shows no signs of letting up. Google the phrase "cloud computing" and the search returns more than 34 million hits.

Cloud computing's claim to developer mindshare is the promise of an Internet-accessible virtual computing and storage environment hosted on connected servers in third-party data centers. Cloud infrastructure services can help enterprises bypass the capital expense of on-site hardware, software licenses, networking and data storage with a pay-for-usage model (per hour or per gigabyte) for off-site resources.

Microsoft's potential ability to leverage its Visual Studio developer cadres to gain a foothold in the cloud computing market is unsettling to existing and prospective players. Months of unrelenting hype have followed Microsoft Chief Software Architect Ray Ozzie's unveiling of the Windows Azure cloud computing platform during Microsoft's Professional Developers Conference 2008. After about a year of developer feedback, Microsoft is expected to announce the date for commercial release of Windows Azure and the Azure Services Platform at PDC 2009 on Nov. 17.

Amazon Web Services LLC and the Google App Engine have a commanding presence as early infrastructure and Platform as a Service (PaaS) providers. IBM Corp., VMware Inc. and even Oracle Corp. (with its pending Sun Microsystems Inc. acquisition) are among the companies playing a furious game of catch-up to prevent Amazon Web Services, Google Inc. and Microsoft from becoming the enterprise cloud's ruling triumvirate.

The initial appeal of cloud (or "utility") computing is the ability to rapidly scale application capacity as fluctuating demand grows and, just as quickly, to shed resources as traffic subsides. In 2009's tight economy, cloud computing offers a way to reduce the risk of testing Web-based projects that require substantial resources for a minimum implementation. At a growing number of companies, management's quest for "green IT" status may soon validate the increased efficiencies of data center operating scale: efficiencies that can reduce the amount of power consumption and heat generation per unit of computing work and data storage.

Amazon Web Services, Google App Engine and Microsoft Windows Azure all offer these features. However, only Microsoft delivers the unique advantage of minimizing the effort, and thus the cost, required to move .NET projects developed with Visual Studio from on-premises IT facilities to virtualized Windows Server clusters and replicated data storage running on the Windows Azure fabric in Microsoft data centers.

The foundation for the Azure Services Platform is the Windows Azure operating system. This OS provides the fabric for virtualized Windows 2008 Server instances; schemaless persistent table, binary large object (blob) and queue storage; .NET Services for managing authentication/authorization, inter-service communication, workflows, service bus queues and routers; SQL Services for data management with SQL Server's relational database features; and consumer-oriented Live services. Like the Google App Engine, Azure provides a development environment that emulates its cloud OS and storage services on-premises. Cloud-based SQL Analysis Services and SQL Reporting Services are scheduled for post-version 1 release.

Secure and reliable data storage is critical to commercial infrastructure and PaaS offerings. Microsoft announced in late May that its cloud infrastructure had received both Statement on Auditing Standards (SAS) 70 Type I and Type II attestations and the International Organization for Standardization and International Electrotechnical Commission (ISO/IEC) 27001:2005 certification for security.

For developers, integrating replicated data storage with cloud-based Web applications or services improves performance and increases data availability and security, while maintaining scalability for traffic surges. The Azure Services Platform provides four classes of replicated data storage: blobs for unstructured data such as music, pictures and video files up to 50GB; tables for structured data; queues for services and messaging; and relational data. (See "Retire Your Data Center," February 2009, for an overview of Azure's layered architecture and structured table storage, which uses the entity-attribute-value data model.)

Move ASP.NET Apps to the Cloud

Visual Studio 2008, ASP.NET 3.5 Service Pack 1 (SP1) and the Azure Services Platform simplify uploading blobs to scalable, replicated storage in virtual server clusters at Microsoft data centers. Azure blobs offer a simple interface to store unstructured binary data and metadata that corresponds to files in a directory structure. Developers can quickly learn how to create, store and consume blobs of varying sizes with a simple Azure ASP.NET Web application called a WebRole.

The top of Azure's object pyramid is the Account, which is the unit of hosted application and storage ownership, resource consumption accounting and billing. Microsoft hasn't disclosed pricing or Service Level Agreement (SLA) details. The Azure team expects to publish pricing and SLA commitments later this summer and to deliver the Azure Services Platform version 1 as a commercial service by the end of 2009.

If you don't have a token for an Azure-hosted service account, which includes two storage services accounts, you can emulate Azure by downloading and installing the Windows Azure software development kit (SDK) community technology preview (CTP), which requires .NET 3.5 SP1 and Windows Vista. The SDK lets you run ASP.NET Web application code in the local Development Fabric and store blobs, tables and queues in Development Storage, which by default is a local SQL Server 2005 or 2008 Express instance.

One of Azure's best features is the ability to develop and debug projects with Visual Studio 2008 in the development environment. Download the Windows Azure Tools for Microsoft Visual Studio CTP to provide templates for cloud-based projects. Visual Studio 2008 is recommended for now. The Windows Azure Tools for Visual Studio May 2009 CTP supports Visual Studio 2010 beta 1. However, the Windows Azure OS doesn't work with .NET 4.0 yet, and the .NET Services CTP (due in March 2009 at press time) doesn't support Visual Studio 2010. Installing the Windows Azure Training Kit is optional but recommended for its hands-on labs.

Using the Azure Services Developer Portal, you can quickly and easily publish your apps to Azure's staging environment for testing with the Azure fabric and cloud-based storage. After you verify operability with a GUID as the service name, a single click exchanges the staging version for the production service, if any exists. The only change you must make to your project to move from the development environment to the Azure Fabric is to comment out a few lines in the ServiceConfiguration.cscfg file and add a few others (see Listing 1). You can perform the edits in the Azure Services Developer Portal's Configuration page if you prefer.
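The configuration change amounts to swapping one set of storage settings for another. The following Python sketch illustrates the idea only; it is not the content of Listing 1. The setting names (AccountName, AccountSharedKey, BlobStorageEndpoint), the local endpoint http://127.0.0.1:10000/ and the local account name devstoreaccount1 are assumptions here and should be checked against your own ServiceConfiguration.cscfg and SDK version.

```python
# Illustrative only: models the "switch the storage settings" step.
# Every setting name and endpoint value below is an assumption, not a
# quotation from the article's Listing 1.

DEVELOPMENT_SETTINGS = {
    "AccountName": "devstoreaccount1",                 # assumed local account
    "BlobStorageEndpoint": "http://127.0.0.1:10000/",  # assumed local endpoint
}


def cloud_settings(account_name, shared_key):
    """Return the settings a cloud-hosted storage account would need."""
    return {
        "AccountName": account_name,
        "AccountSharedKey": shared_key,
        "BlobStorageEndpoint": "http://blob.core.windows.net/",  # assumed
    }


def active_settings(use_cloud, account_name=None, shared_key=None):
    """Pick one settings profile, mirroring the comment-out/add edit."""
    if not use_cloud:
        # Return a copy so callers cannot mutate the shared defaults.
        return dict(DEVELOPMENT_SETTINGS)
    if not account_name or not shared_key:
        raise ValueError("cloud storage needs an account name and a key")
    return cloud_settings(account_name, shared_key)
```

Keeping both profiles side by side makes the point of the edit visible: application code stays the same, and only the account name, key and endpoint differ between the development environment and the cloud.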

Register for a cloud-hosted service account token; the service provisioning process is Byzantine and changes often. The wait is usually only a day or two, down from weeks for early CTPs. Click the Register for Azure Services link to open Microsoft Connect's Applying to the Azure Services Invitations Program page. Complete the survey, click Submit, and wait for the token to arrive in the e-mail account for the Windows LiveID you presented.

When you receive the token (which gives you 2,000 virtual machine (VM) hours, 50GB of cloud storage and 20GB per day of storage traffic at no charge), go to the Azure Services Developer Portal. Sign in with the same LiveID, click the I Agree button to create a new developer portal account, and click Continue to open the Redeem Your Invitation Token page. (Alternatively, click the Account tab and the Manage My Tokens link.) Copy the token product key to the text box and click the New Project link or the Claim Token and Continue buttons to open the Project | Create a New Service Component page. Click the Hosted Services button to open the Create a Project: Project Properties page, type a Project Label, optionally fill in the Description text box, and click Next. Type a unique DNS name for your hosted service in the Service Name text box and click Check Availability to verify that no one has used it previously.

Microsoft enabled Azure's geo-location service in late April, so you can now specify an Affinity Group to ensure that hosted-service code and your storage account reside in the same data center to maximize performance. To date, only two United States-based data centers are in operation: USA-Northwest (Quincy, Wash.) and USA-Southwest (San Antonio, Texas). Select the Yes, This Service Is Related to Some of My Other Hosted Services ... and Create a New Affinity Group option buttons, give the affinity group a name, such as USA-NW1, and choose one of the two current data centers from the Region list. Click the Create button to create the new hosted service and open the Create a Service: Hosted Project with Affinity Group page.

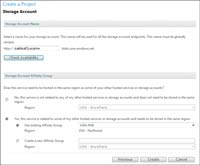

Each hosted-service token entitles you to two storage services accounts, which share a similar setup page. To add a storage service account, click the New Project link to reopen the Project | Create a New Service Component page, and click the Storage Account button. Add a storage Project Label and Description, and click Next to open the Create a Project: Storage Account page. Type a DNS name for the account, select the Yes, This Service Is Related to Some of My Other Hosted Services ... and Existing Affinity Group option buttons, and select your Affinity Group name in the list (see Figure 1).


Figure 1. Assign Your Storage Services to the Hosted Service's Affinity Group

Microsoft added the affinity groups feature to Azure in the March 2009 CTP. Affinity groups let you specify one Microsoft data center to hold your cloud application (WebRole or WorkerRole) and its data. Keeping the data close to the code in the same geographical location eliminates performance hits from network hops.

You'll also need to download and install the Windows Azure SDK to provide the local Developer Fabric and Storage services, as well as the StorageClient sample C# class library, which enables manipulating blobs as .NET objects. The Windows Azure SDK May 2009 CTP adds new features to Azure table and blob storage, but the CTP's .NET StorageClient library API doesn't support them. You'll need an updated version of StorageClient to take advantage of new CopyBlob and GetBlockList features for blobs.

PHP developers can create a native interface for Azure storage accounts using the PHP SDK for Windows Azure CTP, which Belgian integrator RealDolmen and project sponsor Microsoft made available in May on Microsoft's open source community hosting site CodePlex. The PHP SDK provides PHP classes for Windows Azure blobs, tables and queues. The May CTP, which is the first preview, is targeted at blob storage.

Create Containers, Blobs and Blocks

Containers hold multiple blobs, emulating the way a folder contains files. Containers are scoped to accounts and, unlike other Azure storage types, can be designated publicly read-only or private when you create the container with Representational State Transfer (REST) requests or .NET code. Private containers require a 256-bit (SHA256) AccountSharedKey authorization string, which you copy from the Primary or Secondary Access Key fields on the storage-service-name Summary page. You can add up to 8KB of metadata to a container in the form of attribute/value pairs. Azure storage offers a REST API, so anyone who knows the Uniform Resource Identifier (URI) for your public storage service, http://storage-service-name.blob.core.windows.net, can list its containers with a simple HTTP GET request, such as http://oakleaf2.blob.core.windows.net/?comp=list. Returning a list of blobs in the oakleafblobs container uses a similar syntax with the container name prefixed to the query string: http://oakleaf2.blob.core.windows.net/oakleafblobs?comp=list. The Fiddler2 Web Debugging Proxy's Request Builder makes it easy to write and execute RESTful HTTP requests.
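The URI patterns above are regular enough to generate in code. Here's a minimal Python sketch that builds the two list URIs from an account name and a container name. The function names are hypothetical, and the sketch only constructs the strings; it doesn't send a request or sign anything, so it applies to public containers only.

```python
from urllib.parse import quote, urlencode

# Host suffix taken from the URIs quoted in the article.
BLOB_HOST_SUFFIX = "blob.core.windows.net"
LIST_QUERY = urlencode({"comp": "list"})  # "comp=list"


def account_uri(account):
    """Base URI for a storage account's blob service."""
    return f"http://{account}.{BLOB_HOST_SUFFIX}"


def list_containers_uri(account):
    """URI for a GET that lists the account's containers."""
    return f"{account_uri(account)}/?{LIST_QUERY}"


def list_blobs_uri(account, container):
    """URI for a GET that lists the blobs in one container."""
    return f"{account_uri(account)}/{quote(container)}?{LIST_QUERY}"
```

Calling list_containers_uri("oakleaf2") and list_blobs_uri("oakleaf2", "oakleafblobs") reproduces the two example URIs in the text, which you can paste into a browser or Fiddler2's Request Builder.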

The maximum size of an Azure blob is 50GB in the May 2009 CTP, but the largest blob you can upload in a single PUT operation is 64MB. Creating larger blobs requires uploading and appending blocks, which can range up to 4MB in size. To handle interrupted uploads, each block has a sequence number so blocks can be added in any order. You can update blobs by uploading new blocks, which overwrite earlier versions. Azure replicates all blob data at least three times for durability. Strong consistency ensures that a blob is accessible immediately after uploading or altering it. Blobs are scoped to containers, so a GET request to return a specific graphic blob from a public oakleafblobs container is http://oakleaf2.blob.core.windows.net/oakleafblobs/blobname.png.
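To make the size limits concrete, the following Python sketch decides whether a payload fits in a single PUT and, if not, plans how to divide it into blocks. It's a rough illustration under stated assumptions: the 64MB and 4MB limits come from the text, but the function names and the block ID format (a Base64-encoded, zero-padded sequence number) are choices made for this example rather than anything the API requires. No data is uploaded.

```python
import base64

MAX_SINGLE_PUT = 64 * 1024 * 1024  # largest blob for one PUT, per the article
MAX_BLOCK_SIZE = 4 * 1024 * 1024   # largest block, per the article


def needs_blocks(total_size):
    """True when the payload is too large for a single PUT."""
    return total_size > MAX_SINGLE_PUT


def plan_blocks(total_size, block_size=MAX_BLOCK_SIZE):
    """Return (block_id, offset, length) tuples that cover the payload."""
    if total_size < 0:
        raise ValueError("total_size must not be negative")
    if not 0 < block_size <= MAX_BLOCK_SIZE:
        raise ValueError("block_size must be between 1 byte and 4MB")
    plan = []
    offset = 0
    index = 0
    while offset < total_size:
        length = min(block_size, total_size - offset)
        # Hypothetical ID scheme: zero-padded sequence number, Base64-encoded.
        block_id = base64.b64encode(f"{index:08d}".encode("ascii")).decode("ascii")
        plan.append((block_id, offset, length))
        offset += length
        index += 1
    return plan
```

Because each tuple carries its own offset and ID, an interrupted upload can resume with any missing block, in any order, which matches the behavior the sequence numbers are meant to support.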

HTTP REST requests use PUT BlobURI to insert a new blob or overwrite an existing blob named BlobURI, which uses the same syntax as GET requests. GET BlobURI can return a range of bytes when the request carries a standard HTTP Range header; the response identifies the returned bytes in its Content-Range entity-header. The REST API also supports HEAD requests to determine the existence of a container or blob without returning its payload. DELETE requests use the same URIs as PUT, GET and HEAD. PUT BlockURI, GET BlockListURI, and PUT BlockListURI use a similar addressing scheme. The Windows Azure SDK's "Blob Storage API" section contains more details about the REST API for Blob Storage.
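Because all four verbs share one URI, a single helper can prepare any of them. The Python sketch below builds (but doesn't send) requests with the standard library's urllib.request.Request. The helper name is hypothetical, and the sketch assumes a partial GET asks for its bytes with the standard HTTP Range request header. Authorization headers for private containers are deliberately left out.

```python
from urllib.request import Request

ALLOWED_METHODS = {"GET", "PUT", "HEAD", "DELETE"}


def blob_request(method, blob_uri, data=None, byte_range=None):
    """Prepare an HTTP request for a blob without sending it.

    byte_range is an optional (first, last) pair of inclusive byte
    offsets and is only meaningful for GET.
    """
    if method not in ALLOWED_METHODS:
        raise ValueError(f"unsupported method: {method}")
    request = Request(blob_uri, data=data, method=method)
    if byte_range is not None:
        if method != "GET":
            raise ValueError("byte_range applies to GET requests only")
        first, last = byte_range
        # Standard HTTP syntax for requesting part of a resource.
        request.add_header("Range", f"bytes={first}-{last}")
    return request
```

For example, blob_request("HEAD", uri) checks for a blob's existence without fetching its payload, while blob_request("GET", uri, byte_range=(0, 1023)) prepares a request for the first kilobyte. Passing the result to urllib.request.urlopen would send it.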

Objectify Blobs and Containers with StorageClient

Writing the code to send HTTP requests and process response messages directly isn't straightforward for most .NET developers. Fortunately, the Azure team provides a sample C# StorageClient class library that enables writing .NET code to manipulate containers and blobs in a more traditional object-oriented style. When you add a reference to StorageClient.dll, you gain access to the Microsoft.Samples.ServiceHosting.StorageClient namespace, which includes BlobStorage (account) and BlobContainer abstract classes, as well as BlobContents, BlobProperties and other classes in BlobStorage.cs. Classes in RestBlobStorage.cs handle translation from .NET method calls to RESTful HTTP requests; helper methods contain code to create blobs from blocks.

The sample code for the AzureBlobTest.sln solution shows you how to write a WebRole application to create storage account and container objects (see Listing 2), upload blobs from online storage in Windows Live SkyDrive or your test computer's file system (see Listing 3) to the container, and then display selected graphic blob content in a new browser window. All event-handling code is contained in the AzureBlobTest WebRole project's Default.aspx.cs code-behind file. FileEntry.cs defines the FileEntry class for uploading local files. The StorageClient library includes class diagrams for BlobStorage.cs and RestBlobStorage.cs class files.

Install the Windows Azure SDK CTP and the Windows Azure Tools for Microsoft Visual Studio CTP, download the sample code (see Go Online for details), read the Readme.txt file, and then unzip the files to a \AzureBlobTest folder. AzureBlobTest.sln connects to the oakleaf2store Storage account by default, but it's easy to change to DeveloperStorage by editing the ServiceConfiguration.cscfg file.

After you redeem your hosted-service token, follow the instructions in the Readme.txt file to publish the project to your own hosted application and storage services in the cloud.

After you try it out for yourself, you'll likely agree that Azure is the easiest and quickest way for .NET developers to experience the enterprise platform of the future: cloud computing with Windows.